Imagine being denied a loan or misdiagnosed by a medical AI — and no one, not even the developers, can explain why it happened. For years, AI systems have operated behind closed doors: powerful but opaque, trusted but misunderstood. The term “black box” has become shorthand for the growing unease around artificial intelligence that can’t justify itself.

This is where Explainable AI — or XAI — steps in. It promises transparency, accountability, and trust in systems that once demanded blind faith. But beneath the surface, the field is in flux. While regulators demand it, some engineers doubt it. Users want it, but rarely know what kind of explanation they’re actually getting. And despite the hype, the most popular XAI techniques still leave major gaps in clarity and understanding.

So what does explainable AI really offer? Who is it for? And more urgently — can it actually deliver on its promises?

Why the World Is Demanding Explainable AI — Now

The push for XAI didn’t emerge from academia alone. It erupted from frustration, failure, and fear — particularly as AI crept into high-stakes decisions across finance, healthcare, criminal justice, and hiring.

Globally, 65% of organizations now cite “lack of explainability” as the biggest barrier to AI adoption — outpacing even cost and complexity. In the U.S., the FDA has made explainability a requirement for AI-enabled medical devices. The EU’s AI Act has gone even further, mandating traceability, auditability, and justification for all high-risk systems. In China, major cities like Shanghai now legally require XAI in public services. These are not fringe trends — they are now defining the rules of AI development worldwide.

Behind these legal shifts lies a deeper concern: accountability. AI is no longer confined to predicting spam or optimizing ads. It is helping diagnose patients, decide parole outcomes, and filter job applications. When systems fail — and they do — explanations aren’t just helpful. They’re essential for fairness, legal defense, and user trust.

At the same time, the explainability movement faces growing scrutiny. On Reddit and in conference hallways, engineers debate whether XAI really helps users — or just provides “plausible-sounding” comfort. There are calls for inherently interpretable models over black-box systems with post-hoc bandages. Some even argue that too much faith in explanations creates new risks — overtrust, lazy oversight, and ethical blind spots.

Still, the momentum is clear. Gartner predicts that by 2025, 75% of organizations will move away from opaque models toward explainable systems — not just to comply with the law, but to secure public trust and market advantage.

Let’s break down what explainable AI really is, how it works, and why so many are betting the future of AI on its success.

What Exactly Is Explainable AI (XAI)?

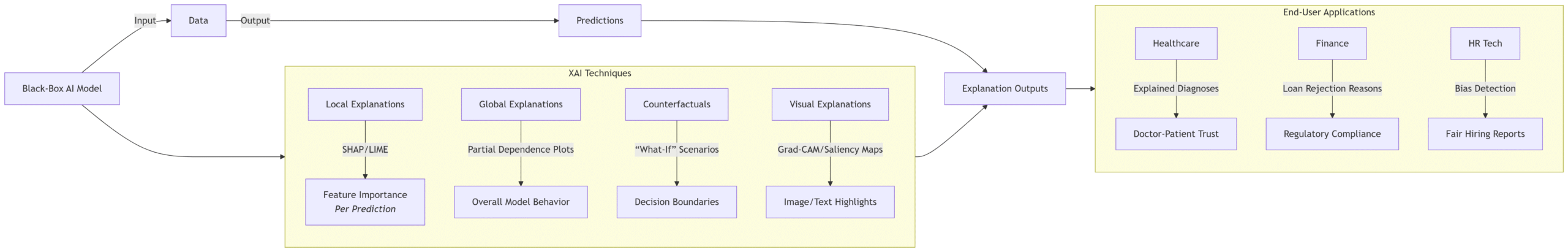

Image Credit: GazeOn

Explainable AI (XAI) refers to methods and tools designed to help humans understand how an AI system makes decisions. Unlike traditional “black box” models, which may produce highly accurate predictions with no insight into why they work, XAI aims to make those decisions transparent, interpretable, and—crucially—actionable.

There are two primary goals of XAI:

- Interpretability: Enabling users (technical or not) to grasp what the model is doing and why.
- Accountability: Making it possible to audit, justify, or challenge AI decisions, especially in high-risk settings.

But here’s the nuance: XAI isn’t a single method or silver bullet. It’s an umbrella term for a range of techniques, frameworks, and strategies designed to fit different models, audiences, and use cases. It’s both a technical domain and a human-centered one.

Intrinsic vs. Post-Hoc Explainability (Mini Explainer)

Intrinsic explainability comes from models that are naturally interpretable, like decision trees or linear regression. You can see exactly how inputs lead to outputs—no additional tools required.

Post-hoc explainability is applied after the fact to opaque models, like deep neural networks. These models might achieve state-of-the-art performance but need add-on tools—like LIME or SHAP—to approximate what’s going on inside.

The trade-off? Intrinsic methods are often easier to understand but may not scale to complex tasks. Post-hoc methods work with cutting-edge models but can be misleading or oversimplified.
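To make the intrinsic case concrete, here is a minimal sketch of a linear scoring model whose explanation is simply its own weights. The feature names, weights, bias, and applicant values are all hypothetical, chosen only for illustration.

```python
# Hypothetical linear credit-scoring model: the weights ARE the explanation.
WEIGHTS = {"credit_score": 0.004, "income_k": 0.01, "late_payments": -0.3}
BIAS = -3.0

def score(applicant):
    """Return the raw linear score for one applicant."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)

def explain(applicant):
    """Per-feature contribution to the score (weight * value)."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}

applicant = {"credit_score": 650, "income_k": 48, "late_payments": 2}
contributions = explain(applicant)

# List contributions from most to least influential.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>14}: {value:+.2f}")
print(f"{'total score':>14}: {score(applicant):+.2f}")
```

Because the contributions and the bias add up exactly to the score, nothing is approximated. That is the property post-hoc tools can only estimate for an opaque model.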

How Explainable AI Actually Works

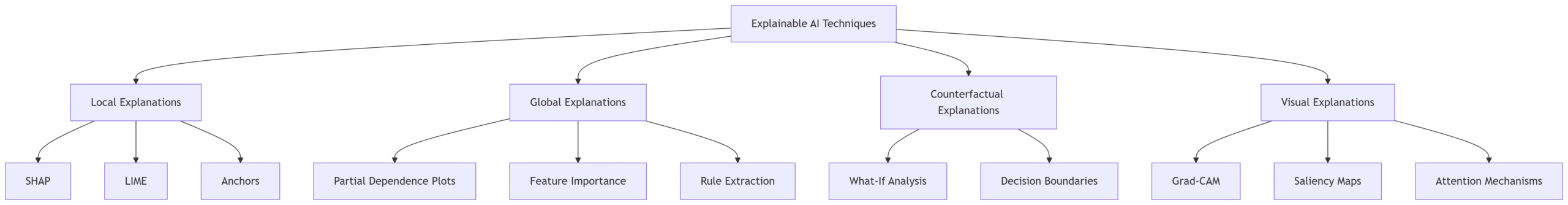

Image Credit: GazeOn

There are several widely used techniques to implement explainability in modern AI systems. Each comes with its own advantages, audiences, and use-case fit.

1. Feature Importance

Tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) estimate how much each input feature contributed to a specific prediction. For example, SHAP might assign roughly 60% of the total attribution for a loan rejection to credit score and 25% to income history.

- Strength: Provides a ranked list of influential features.
- Weakness: Doesn’t show interactions or full logic flow.
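The idea behind Shapley-style attribution can be sketched by brute force for a tiny model. This is not the SHAP library's API or its estimators. It is the textbook Shapley computation against a single assumed baseline applicant, and the scoring function, feature names, and values are hypothetical.

```python
from itertools import permutations

# Hypothetical black-box scoring function over three features.
def model(credit_score, income_k, late_payments):
    base = 0.002 * credit_score + 0.01 * income_k
    # Interaction: late payments hurt more when income is low.
    penalty = 0.2 * late_payments * (2.0 if income_k < 40 else 1.0)
    return base - penalty

FEATURES = ["credit_score", "income_k", "late_payments"]
baseline = {"credit_score": 700, "income_k": 60, "late_payments": 0}   # assumed "typical" applicant
applicant = {"credit_score": 620, "income_k": 35, "late_payments": 2}

def value(coalition):
    """Model output when only features in `coalition` take the applicant's values."""
    inputs = {f: (applicant[f] if f in coalition else baseline[f]) for f in FEATURES}
    return model(**inputs)

def shapley_values():
    """Average each feature's marginal contribution over all feature orderings."""
    totals = {f: 0.0 for f in FEATURES}
    orderings = list(permutations(FEATURES))
    for order in orderings:
        present = set()
        for f in order:
            before = value(present)
            present.add(f)
            totals[f] += value(present) - before
    return {f: t / len(orderings) for f, t in totals.items()}

phi = shapley_values()
for f, v in phi.items():
    print(f"{f:>14}: {v:+.3f}")
# Attributions sum to model(applicant) - model(baseline).
print("sum of attributions:", round(sum(phi.values()), 3))
```

Note how the income and late-payment interaction is split between the two features rather than reported as an interaction, which illustrates the weakness listed above.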

2. Counterfactual Explanations

These show what would need to change to alter a decision. For instance, “If the applicant’s income were $5,000 higher, the loan would have been approved.”

- Strength: Highly intuitive for users.
- Weakness: May not always produce actionable advice.
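A counterfactual search can be as simple as nudging one input until the decision flips. The sketch below assumes a hypothetical approval rule (integer arithmetic, invented coefficients) standing in for a black-box model, and searches only over income.

```python
# Hypothetical approval rule standing in for a black-box model.
def approve(income, credit_score):
    return 4 * income + 500 * credit_score >= 550_000

def income_counterfactual(income, credit_score, step=500, max_raise=50_000):
    """Smallest income increase (in `step` increments) that flips a denial to approval.

    Returns 0 if already approved, or None if no raise up to `max_raise` works.
    """
    for raise_by in range(0, max_raise + step, step):
        if approve(income + raise_by, credit_score):
            return raise_by
    return None

needed = income_counterfactual(income=45_000, credit_score=680)
if needed is None:
    print("No income change within range flips the decision.")
else:
    print(f"If income were ${needed:,} higher, the loan would be approved.")
```

The `None` branch matters: when no realistic change flips the outcome, or the only changes that would are ones the applicant cannot make, the counterfactual is not actionable.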

3. Visualization Techniques

Especially in computer vision, tools like Grad-CAM (Gradient-weighted Class Activation Mapping) highlight which parts of an image influenced the model’s classification.

- Strength: Great for visual domains like medical imaging.
- Weakness: Less useful for tabular or text-based data.
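Grad-CAM itself needs a convolutional network and its gradients, so the sketch below swaps in a simpler relative, occlusion sensitivity, to show how a heatmap over pixels is produced. The 4x4 "image" and the toy scoring function are hypothetical.

```python
# Toy "classifier": responds to brightness in the centre 2x2 patch of a 4x4 image.
def model_score(image):
    return sum(image[r][c] for r in (1, 2) for c in (1, 2)) / 4.0

def occlusion_map(image, fill=0.0):
    """Score drop when each pixel is replaced by `fill`; a larger drop means more influence."""
    base = model_score(image)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c, _ in enumerate(row):
            occluded = [list(x) for x in image]   # copy so the input is untouched
            occluded[r][c] = fill
            heat_row.append(base - model_score(occluded))
        heat.append(heat_row)
    return heat

image = [
    [0.1, 0.2, 0.1, 0.0],
    [0.3, 0.9, 0.8, 0.1],
    [0.2, 0.7, 1.0, 0.2],
    [0.0, 0.1, 0.2, 0.1],
]
for row in occlusion_map(image):
    print(" ".join(f"{v:.2f}" for v in row))
```

Only the centre pixels get non-zero heat, matching what the toy model actually looks at. With a real network the map is noisier and depends on the choice of fill value.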

4. Surrogate Models

These train a simpler, interpretable model (like a decision tree) to mimic a complex model’s behavior, either globally or locally around a given prediction.

- Strength: Offers a digestible proxy.
- Weakness: Only approximates the original logic.
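Here is a minimal sketch of a surrogate: a one-rule decision stump fitted to mimic a hypothetical black-box function, plus a fidelity score showing how often the two agree. For simplicity it is fitted over a whole grid of inputs. A local surrogate would restrict the grid to a neighbourhood of one prediction.

```python
# Hypothetical black box: approves when a nonlinear score clears a threshold.
def black_box(credit_score, income_k):
    return (credit_score / 850) ** 2 + 0.004 * income_k > 0.8

# Probe the black box on a fixed grid of inputs (deterministic, no sampling).
grid = [(cs, inc) for cs in range(500, 851, 10) for inc in range(20, 121, 5)]
labels = [black_box(cs, inc) for cs, inc in grid]

def fit_stump(points, labels):
    """Depth-1 decision tree: pick the single feature/threshold that best mimics the labels."""
    best = (0.0, None, None)          # (fidelity, feature_index, threshold)
    for feat in (0, 1):
        for threshold in sorted({p[feat] for p in points}):
            preds = [p[feat] >= threshold for p in points]
            fidelity = sum(a == b for a, b in zip(preds, labels)) / len(labels)
            if fidelity > best[0]:
                best = (fidelity, feat, threshold)
    return best

fidelity, feat, threshold = fit_stump(grid, labels)
name = ("credit_score", "income_k")[feat]
print(f"Surrogate rule: approve if {name} >= {threshold}")
print(f"Fidelity to the black box on the grid: {fidelity:.1%}")
```

The fidelity figure is the honest part of a surrogate explanation: whatever share of cases the simple rule gets wrong is behavior the explanation does not cover.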

5. Model-Agnostic Explanation Frameworks

These frameworks work with any black-box model. LIME, SHAP, and ELI5 fall into this category, providing generalized interpretability for developers and regulators alike.

Still, even the best tools have blind spots. In 2023, Meta’s “Beyond Post-Hoc” study found that leading techniques could only explain about 40% of a model’s actual behavior in complex decisions. That leaves room for false confidence—where the explanation sounds plausible but doesn’t capture the model’s true internal reasoning.

Why Explainability Isn’t Just a “Nice-to-Have”

Explainable AI is not just a debugging tool. It’s a trust layer. According to the Stanford AI Index (2023), over 65% of global organizations cited lack of explainability as the top reason they’ve stalled or limited AI adoption.

In regulated industries, XAI is now a survival skill. Banks must show regulators how automated decisions are made. Hospitals must explain how AI-derived diagnoses were reached. And consumer tech platforms—from TikTok to LinkedIn—are under pressure to clarify how algorithms shape feeds, rankings, and recommendations.

But the deeper issue isn’t just compliance. It’s human trust. As AI becomes more autonomous, users—whether patients, judges, or everyday consumers—need to understand not just what an algorithm is doing, but why it chose what it did.

From Hospitals to Hedge Funds: Where XAI Is Already Shaping Decisions

Explainable AI is no longer theoretical. It’s being quietly embedded into mission-critical systems across healthcare, finance, manufacturing, and beyond — often under legal pressure, but increasingly as a strategic advantage.

Healthcare: Explaining Life-and-Death Decisions

AI is now diagnosing disease, reading X-rays, and recommending treatments. But doctors and regulators won’t accept blind automation. Enter XAI.

- In 2023, the FDA approved 223 AI-enabled medical devices in the U.S., up from just six in 2015. Many approvals now require explainability features to support clinical transparency and informed consent.
- Japanese hospitals piloted systems built by Fujitsu and Hokkaido University that explain diagnostic outputs in step-by-step formats for both doctors and patients, a move that increased trust and adoption.
- Still, some researchers reported frustration. In one lab, attempts to interpret deep learning outputs produced explanations “barely better than random guessing,” prompting researchers to abandon the model entirely.

Finance & Insurance: XAI for Compliance and Risk Reduction

In finance, algorithms already decide who gets credit, who’s flagged for fraud, and what stocks to buy. Without explainability, these decisions are legally and ethically fraught.

- EU financial regulators now require algorithmic transparency in banking and insurance, especially when automated systems impact access to loans or pricing.
- In 2024, 44% of European finance firms ranked explainability as a top concern for AI adoption, ahead of speed or cost, according to the Stanford AI Index.
- U.S. institutions are feeling similar pressure. The FTC has launched investigations into AI hiring tools and credit scoring systems that can’t provide human-understandable justifications for decisions.

Real-world win: Some major banks now use SHAP and LIME to auto-generate explanations for loan decisions — reducing compliance risk and improving customer satisfaction.

Manufacturing & Retail: XAI in the Supply Chain

The world of logistics and production is also getting a transparency upgrade.

- Manufacturers in North America and Germany are applying XAI to automated quality control and supply chain optimization, areas once dominated by rigid rule-based systems.
- These systems explain why certain shipments were prioritized or why defects were flagged, helping managers troubleshoot and refine workflows.
- In retail, XAI is improving recommendation engines by giving customers insight into why products are being surfaced, enhancing trust and clickthrough rates.

Caveat: While adoption is accelerating, most use cases remain internal. Very few companies expose their XAI layers to consumers — a signal that true “glass box” systems are still rare.

Education & Public Services: XAI in Human-Centered Design

In public systems, trust isn’t optional — it’s mission-critical.

- One Reddit user described a thesis project that added an “Explain” button to an educational chatbot for computer science students. The feature showed graphs of input/output pathways and boosted student confidence, but also raised concerns about oversimplification.
- In Beijing and Shanghai, local governments now mandate XAI for public-facing AI in healthcare, social services, and transportation, part of China’s broader push for algorithmic transparency.
- UX researchers stress that explanations must be audience-specific. A model explanation that satisfies a developer might overwhelm a student or confuse a teacher.

Enterprise Mandates: Big Companies Are Betting on XAI

- 96% of executives at enterprises with over 1,000 employees said they are required to explore or implement XAI by 2025.
- 52% plan to increase their AI budgets by 25–50%, with explainability cited as a core reason.
- In sectors like human resources, XAI is becoming a differentiator. AI tools that rank resumes or recommend hires now come under scrutiny not just for accuracy, but for transparency.

Summary Table: XAI Adoption by Sector

| Sector | XAI Use Cases | Mandates / Stats |
|---|---|---|
| Healthcare | Diagnostic AI, treatment recommendations | Many FDA approvals now require explainability features. 223 devices approved in 2023 alone. |
| Finance | Credit scoring, fraud detection, investment models | EU and U.S. regulators require transparency in automated decisions. |
| Manufacturing | Supply chain AI, quality control, logistics optimization | German and North American firms deploying XAI for operational clarity. |
| Retail | Recommendation engines, dynamic pricing | Some retailers using XAI to improve customer trust and engagement. |
| Education | Tutoring bots, exam grading assistants | Student-facing XAI piloted in academia; feedback is positive but mixed. |
| Public Sector | Transportation, social services, health registries | Chinese cities now mandate XAI for public-facing AI platforms. |

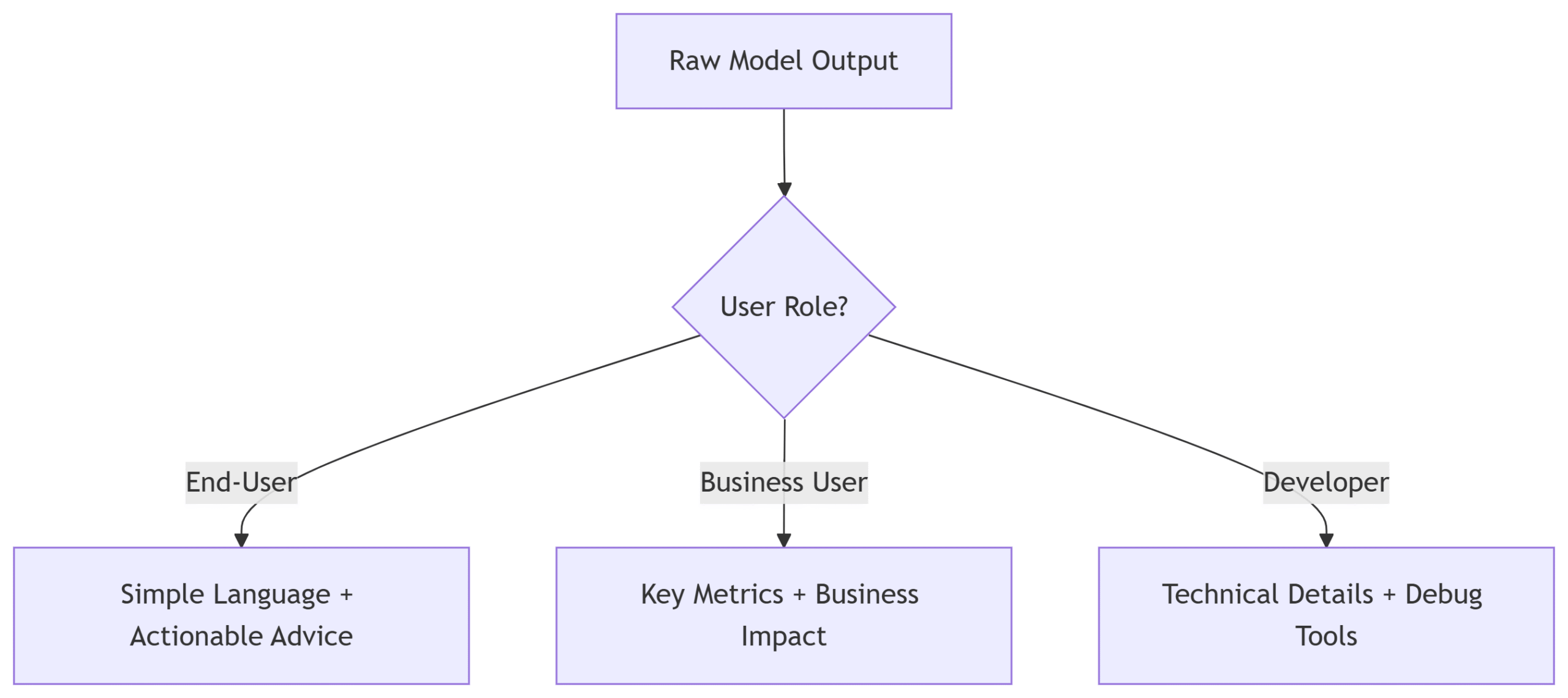

Image Credit: GazeOn

Confessions, Confusion, and Contradictions: The XAI Backlash

Explainable AI is marketed as the cure for black-box systems. But in practice, it often delivers murkier answers than promised. From forum skeptics to frontline engineers, a new question is surfacing: Are we explaining AI — or just comforting ourselves with plausible stories?

“Barely Better Than Random”: Reddit Threads Reveal Disillusionment

In one r/MachineLearning thread, a user recounted their lab’s frustration with XAI methods. Integrating interpretable components into a deep model tanked its performance. Post-hoc explanation tools? “Barely better than random guessing.” The team abandoned the project entirely.

Another thread debated whether current XAI methods actually help users understand anything. Some posters called SHAP outputs “mathematically satisfying but practically useless” for everyday users.

“Most of these ‘explanations’ aren’t explanations at all — they’re just plausible justifications that sound technical enough to satisfy a regulator.”

— Reddit user, r/ArtificialIntelligence

This sentiment isn’t isolated. UX researchers in a 2023 IBM study found that most users don’t want technical explanations. They want actionable insight or confidence, especially when outputs are unexpected. And when explanations fail to provide that, frustration spikes.

Myth #1: “AI Is a Black Box, Always”

Not true. While deep learning models are often opaque, models like decision trees, linear regression, and even some rule-based NLP systems are inherently interpretable.

XAI tools like LIME, SHAP, and Captum have bridged part of the gap — but only partially. Gartner still predicts that by 2025, 75% of organizations will shift toward explainable systems. Why? Trust and compliance are now table stakes.

Still, some experts warn: transparency ≠ understanding. You can explain what features drove a model’s decision without ever grasping the logic behind it.

Myth #2: “Explainability Means Accuracy Drops”

This one’s complicated.

Some researchers argue that pushing for explainability forces the use of simpler models — sometimes at the cost of performance. In fields like finance or medicine, that trade-off could be dangerous.

But others counter that post-hoc tools allow high-performing black-box models to remain competitive while adding transparency.

“Explainable AI can also take commonly held beliefs and prove that the data does not back it up.”

— Bernard Marr, Futurist & Author, Forbes

Still, caution remains. Meta’s 2023 study, Beyond Post-hoc Explanations, showed that even leading techniques explain only 40% of model behavior in complex tasks.

Myth #3: “All Explanations Are Equal”

Far from it. What makes sense to a machine learning engineer may confuse — or mislead — a layperson.

- SHAP values can tell you how much a feature contributed to a prediction. But most users won’t know what a SHAP value even is.
- In UX tests, over-detailed explanations actually led to reduced user trust, as users felt overwhelmed or suspicious.
- Some public XAI tools have even been gamed, producing explanations that mask biased decisions with technically “plausible” language.

The insight: XAI must be audience-aware. Developers, regulators, and end users need different kinds of clarity.

Myth #4: “Explainable AI Is Always Fair AI”

Absolutely not. XAI can describe why a biased model produced a biased result — but that doesn’t fix the underlying bias. Worse, plausible-sounding explanations may make unfair decisions seem justified.

“In the gap between capability and explainability lies the true frontier of AI risk. Imagine a healthcare AI recommending an unconventional treatment without explanation, or a financial algorithm denying your loan application with no justification. Would you trust them? This question lies at the heart of AI’s greatest challenge today: the need for explainable AI that can help us understand how and why machines make the decisions they make.”

— Nitor Infotech, 2025

This has sparked a contrarian push among some researchers: shift focus from explainability to robustness — emphasize model monitoring, outcome audits, and ethical guardrails over “explanations” that can be selectively designed or manipulated.
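An outcome audit of the kind described here looks at what the system decided rather than what it says about itself. A minimal sketch, assuming a hypothetical decision log of group labels and outcomes (invented for illustration, not real data):

```python
from collections import defaultdict

# Hypothetical decision log: (group label, approved?) pairs. Not real data.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

def approval_rates(records):
    """Approval rate per group."""
    counts = defaultdict(lambda: [0, 0])      # group -> [approved, total]
    for group, approved in records:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {g: a / n for g, (a, n) in counts.items()}

rates = approval_rates(decisions)
gap = max(rates.values()) - min(rates.values())
print(rates)
print(f"Demographic parity gap: {gap:.2f}")
```

A large gap does not prove unfairness on its own, but it is a signal that no per-decision explanation, however plausible, can talk its way around.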

Myth #5: “Explainability = Transparency”

Even when tools like LIME or Grad-CAM are used, they don’t open a model’s entire decision-making logic. They approximate it. And approximations can distort as much as they clarify.

This leads to a dangerous dynamic: If regulators, users, or product managers treat XAI outputs as gospel, they may miss deeper issues — like model drift, data leakage, or unintended correlations.

Contrarian Take: Explainability Can Be a Mirage

Some experts now argue that the entire XAI movement risks turning into a compliance checkbox. By providing any explanation — even one that oversimplifies or distorts — companies may meet legal requirements without delivering real transparency.

“Explainability should not be a license to use opaque models. It should be a call to rethink what kinds of models we use in the first place.”

— UX researcher, r/UXResearch

This is why some now advocate for “glass-box-first” policies: use inherently interpretable models unless absolutely necessary. And if black-box systems must be used, supplement them with independent oversight, human-in-the-loop systems, and outcome audits — not just SHAP plots.

What’s Coming for XAI: Regulation, Innovation, and a Shifting Trust Landscape

XAI is no longer a research niche or compliance afterthought. It’s evolving fast — shaped by global legislation, rising public concern, and the urgent need for trustworthy, auditable AI systems.

So what’s on the horizon for explainability?

Regulation Is Redefining the Rules of AI

🇪🇺 Europe: The EU AI Act Sets the Global Tone

In August 2024, the EU AI Act entered into force, with sweeping requirements for high-risk AI systems that phase in over the following years. Under the Act:

- AI systems used in healthcare, finance, and critical infrastructure must offer human-readable explanations.
- Organizations must maintain audit trails, demonstrate risk mitigation, and provide real-time justification for AI outputs.
- Non-compliance penalties? Up to €35 million or 7% of global annual turnover for the most serious violations.

XAI implication: Transparency and traceability are no longer optional. Even companies outside the EU must comply if they serve EU customers.

🇺🇸 United States: From Executive Orders to Federal Law

In late 2023, the Biden administration issued an Executive Order mandating AI fairness, transparency, and accountability. The upcoming Federal AI Act will require:

- Mandatory AI audits for high-impact systems
- Stricter bias detection and redress mechanisms
- Clear justification of algorithmic decisions

The FTC is already enforcing these standards — cracking down on deceptive black-box systems in hiring, credit scoring, and insurance.

🇨🇳 China: Algorithm Labeling and Public-Facing XAI

As of September 2025:

- All AI-generated content must be labeled as such
- Companies must register algorithms and explain how they operate in public-facing services
- Transparency is now required in healthcare, transportation, and education

Global Insight: These shifts mean companies deploying AI globally must navigate a complex, fast-evolving regulatory minefield. Explainability isn’t just best practice — it’s increasingly a legal mandate.

Toolkits and Frameworks Are Evolving — Fast

A new wave of tools is making XAI easier to deploy and scale:

- SHAP, LIME, Captum: Still the go-to tools for feature attribution and post-hoc analysis.
- IBM’s AI FactSheets and Google’s Model Cards: Offer documentation standards that help translate black-box logic into human-understandable formats.
- Counterfactual AI: Gaining traction in customer service and decision support systems for its intuitive, “what-if” explanations.

More advanced research is moving into:

- Causal inference for explanation (explaining not just correlation, but cause)
- Interactive XAI (where users can explore model logic dynamically)
- Federated explainability (for distributed models like those used in healthtech and privacy-first AI)

XAI Is Becoming Real-Time and User-Tailored

The next generation of explainable AI isn’t just post-hoc. It’s interactive, audience-aware, and role-specific:

- Doctors get clinical rationale.
- Regulators get bias metrics and audit logs.
- End-users get intuitive “why” answers, not math.

This shift toward contextual explainability is being driven by UX design teams, HCI researchers, and enterprise compliance officers who realize that a SHAP plot alone doesn’t build trust.
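One way to picture role-specific explanations is a single set of attributions rendered differently per reader. The sketch below is illustrative only: the attribution numbers, audience names, and wording are hypothetical, and a real system would draw on far richer context.

```python
# Hypothetical feature attributions for one loan decision (e.g. from a tool like SHAP).
attributions = {"credit_score": -0.42, "income": -0.18, "account_age": 0.05}

def render(attributions, audience):
    """Turn the same attributions into a message suited to the reader."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "engineer":
        # Full signed values, most influential first.
        return ", ".join(f"{name}={value:+.2f}" for name, value in ranked)
    if audience == "regulator":
        # Share of total absolute attribution held by the top factor.
        top, value = ranked[0]
        total = sum(abs(v) for v in attributions.values())
        return f"Top factor: {top} ({abs(value) / total:.0%} of total attribution)."
    if audience == "end_user":
        # Plain-language "why", with no numbers.
        top, value = ranked[0]
        direction = "lowered" if value < 0 else "raised"
        return f"Your {top.replace('_', ' ')} {direction} your result the most."
    raise ValueError(f"unknown audience: {audience}")

for audience in ("engineer", "regulator", "end_user"):
    print(f"{audience:>9}: {render(attributions, audience)}")
```

The underlying numbers never change. Only the framing does, which is why the translation layer deserves as much scrutiny as the attribution method itself.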

What Thought Leaders Predict

- Gartner forecasts that organizations using transparent, explainable AI will achieve 30% higher ROI than those using black-box systems by 2025.
- Meta’s researchers caution that post-hoc techniques still explain less than half of a model’s true behavior, so future systems must blend explainability with auditability and robustness.
- Google CEO Sundar Pichai emphasized in a TIME interview: “The future of AI is not about replacing humans — it’s about augmenting them. And that means making AI’s logic accessible.”

What Forward-Looking Companies Are Doing Now

✔ Building XAI into product development pipelines — not as an add-on, but as a first-class feature.

✔ Creating internal explainability benchmarks across departments — legal, data science, UX, and customer support.

✔ Developing stakeholder-specific dashboards — to deliver real-time, relevant explanations depending on who’s asking and why.

✔ Designing for fail states — building XAI into error handling, red flags, and override systems when AI decisions appear risky or ambiguous.

Future-Proofing XAI: A Cross-Functional Mandate

The next wave of explainability won’t be driven by data scientists alone. It will require:

- UX designers to make explanations digestible
- Lawyers and ethicists to define what counts as “fair” or “sufficient”
- Engineers to build models that balance complexity with interpretability
- Business leaders to set the cultural tone around transparency and trust

And perhaps most critically: Organizations must be prepared to shift strategies as models, regulations, and social expectations evolve.

Frequently Asked Questions About Explainable AI (XAI)

1. What is explainable AI, and why does it matter?

Explainable AI (XAI) refers to methods that help humans understand how an AI model made its decision. It matters because AI now influences high-stakes outcomes — from approving mortgages to recommending cancer treatments. Without transparency, decisions can’t be audited or trusted. XAI adds accountability to systems that would otherwise act without justification.

2. How does explainable AI help build trust?

Trust in AI comes from understanding why it made a decision, not just what it decided. Tools like SHAP and LIME break down which features influenced an outcome. Counterfactuals show what changes would alter a result. This transparency gives users confidence — or a basis to challenge flawed outputs.

3. What are real-world examples of explainable AI in action?

- Healthcare: FDA-approved diagnostic AI systems now require explainability for clinician trust.
- Finance: European banks use SHAP to justify credit decisions and meet regulatory standards.
- Education: Chatbots with “Explain” buttons let students trace how AI-generated answers are formed.
- Retail: Recommendation engines now disclose logic like “Based on your recent views.”

4. What techniques are used to make AI explainable?

- SHAP & LIME: Show which features influenced a model’s output.
- Grad-CAM: Highlights image areas that drove a classification.
- Counterfactuals: Show what would’ve changed the outcome.
- Surrogate models: Simplify logic via approximations like decision trees.

5. Is explainable AI the same as ethical or fair AI?

No. XAI can describe how a model made a biased decision — but it can’t always fix the bias itself. Fairness requires better data, intentional design, and regular auditing. XAI supports accountability, but it’s not a substitute for ethical rigor.

6. Can explainability improve model performance?

It depends on how you define performance. In pure accuracy, interpretable models may lag behind complex ones. But in trust, compliance, and adoption, explainability boosts real-world effectiveness. Gartner predicts transparent AI systems will achieve 30% higher ROI than opaque ones.

7. Which industries rely most on explainable AI?

- Healthcare (diagnosis and treatment recommendations)
- Banking & Insurance (credit scoring, fraud detection)
- Legal & Public Sector (eligibility decisions, sentencing tools)
- Education, Retail, HR (student support, product recommendations, hiring AI)

Wherever AI affects human lives, explainability becomes essential.

8. What’s the difference between intrinsic and post-hoc explainability?

- Intrinsic explainability is built into the model, as in decision trees, where logic is visible by design.
- Post-hoc methods apply to black-box models after training, using tools like LIME or SHAP to approximate the model’s decision logic.

Conclusion:

Explainable AI sounds like progress. And in some ways, it is. Engineers use it to debug. Regulators treat it as a safety valve. For users, it’s a flashlight in a dark tunnel. It dares to answer the question AI once ducked: “Why?”

But here’s the catch: those answers often raise more questions than they settle. Some explanations pacify. Some confuse. Others obscure the very logic they’re meant to reveal. And as AI grows more autonomous, understanding it becomes harder — just when we need it most.

We don’t need perfect transparency. But we need enough. Enough to spot bias. Enough to push back. Enough to trust — or say no.

Because let’s face it: explaining a bad decision doesn’t make it better.

That’s why the real shift isn’t in code — it’s in culture. The companies leading the way are treating explainability as a value, not a checkbox.

So, no — the point isn’t whether AI can explain. The point is whether we’re ready to listen.

Key Takeaways

- XAI is becoming a regulatory mandate, not just a technical feature. Europe, the U.S., and China are all enforcing transparency.
- User trust now depends on explanation. People don’t just want outputs; they want reasons.
- Explanation ≠ understanding. Most XAI tools simplify model logic, and sometimes oversimplify it.
- Audience matters. A data scientist needs different clarity than a customer or a patient.
- Explainability isn’t fairness. XAI can describe biased decisions without fixing them.
- Smart firms are building for interpretability first, not retrofitting it later.

About Author:

Eli Grid is a technology journalist covering the intersection of artificial intelligence, policy, and innovation. With a background in computational linguistics and over a decade of experience reporting on AI research and global tech strategy, Eli is known for his investigative features and clear, data-informed analysis. His reporting bridges the gap between technical breakthroughs and their real-world implications, bringing readers timely, insightful stories from the front lines of the AI revolution. Eli’s work has been featured in leading tech outlets and cited by academic and policy institutions worldwide.