Artificial intelligence in medicine was pure science fiction just a few years back. Today? It’s clocking in for regular shifts at hospitals, screening labs, and tribal clinics. Deep learning tools read mammograms across the UK. Predictive models flag sepsis at Johns Hopkins. AI isn’t some distant future anymore – it’s part of the everyday grind.

Still, the real story runs deeper. AI isn’t replacing doctors. It’s not some magic cure-all for healthcare’s problems. And it definitely hasn’t escaped old-school headaches like red tape, bias, or tight budgets. But where AI actually works, the results speak for themselves: fewer readmissions, earlier disease detection, and some relief for burned-out medical staff.

This breakdown cuts through the hype to show what’s really happening on hospital floors. Every case study, number, and insight here comes straight from verified, real-world practice. From UnityPoint’s 40% drop in readmissions to Estonia’s national AI-powered patient system, we’re looking at how AI is quietly reshaping medicine, one workflow at a time.

The Crisis That Made AI Essential

Healthcare didn’t adopt AI out of curiosity. It was desperation. Years of clinician burnout, endless paperwork, and skyrocketing costs forced health systems to try something beyond small fixes. That’s where AI found its opening.

Photo Credit: GazeOn

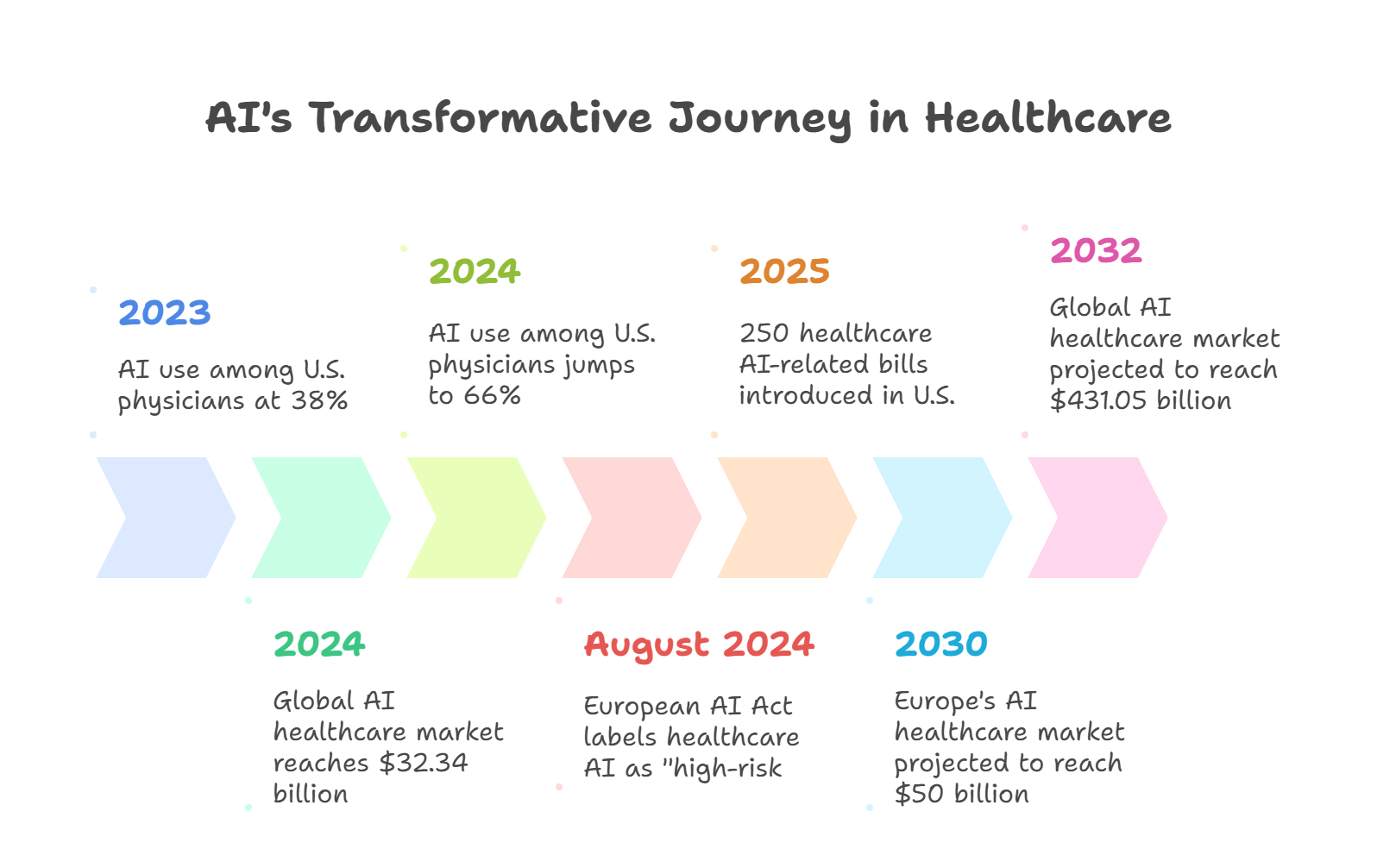

The numbers tell the story. By late 2024, the global AI healthcare market hit $32.34 billion, with projections shooting toward $431.05 billion by 2032, according to Global Market Insights via Docus.ai. North America holds 58.9% of the market, while Asia-Pacific is growing at a 42.5% yearly rate. Europe expects to reach $50 billion by 2030.
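For a sense of scale, the two global market figures above imply a compound annual growth rate of about 38%. The quick check below is a back-of-the-envelope sketch, not a figure from the cited report: it assumes eight compounding years (2024 to 2032), and the variable names are purely illustrative.

```python
# Market figures as quoted in the article (USD billions).
start_value = 32.34   # late 2024
end_value = 431.05    # 2032 projection

# Assumption: eight full compounding years between the two figures.
years = 2032 - 2024

# Standard compound annual growth rate formula.
implied_cagr = (end_value / start_value) ** (1 / years) - 1

print(f"Implied annual growth: {implied_cagr:.1%}")
```

If the projection window is counted differently, the implied rate shifts, so treat the result as a rough guide only.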

Real-world adoption matches the growth. Over 80% of hospitals worldwide now use AI to improve workflow and patient outcomes, based on Deloitte’s 2024 Health Care Outlook. In the U.S., AI use among physicians jumped from 38% in 2023 to 66% in 2024, TempDev Blog reports.

Regulators are scrambling to keep up. Since August 2024, the European AI Act has classified most healthcare AI tools as “high-risk,” requiring strict compliance. Meanwhile, U.S. states introduced 250 healthcare AI-related bills in 2025 alone, according to the American Medical Association.

This isn’t a trend anymore. It’s a complete system overhaul.

What AI Actually Does in Today’s Medical Settings

Photo Credit: GazeOn

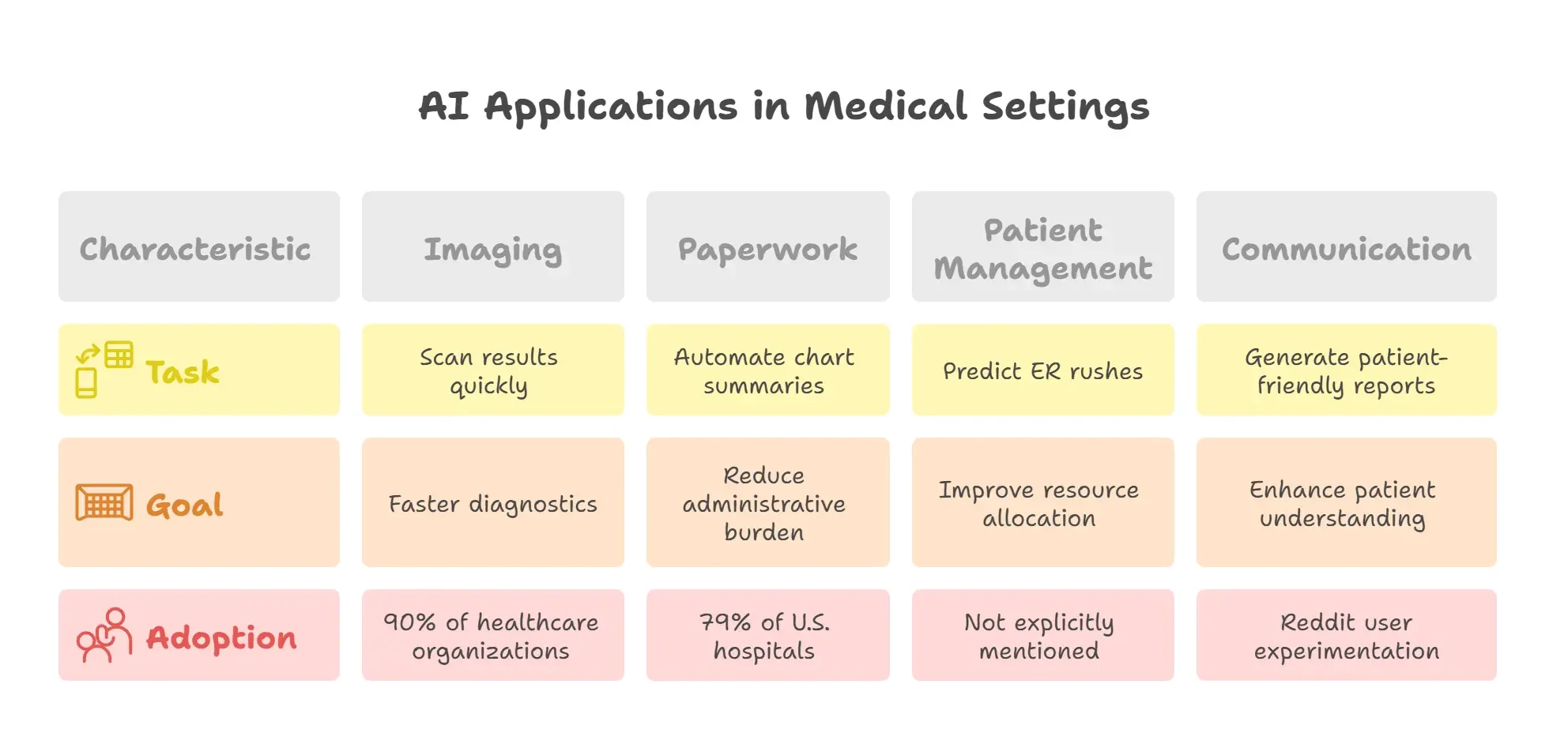

Forget the flashy presentations. Here’s what AI is really doing inside clinical settings right now:

It’s not about robot surgeons. It’s about practical tasks like flagging patients before they get worse, scanning imaging results at lightning speed, turning doctor notes into plain English, predicting ER rushes for better staffing, and automating chart summaries during patient visits.

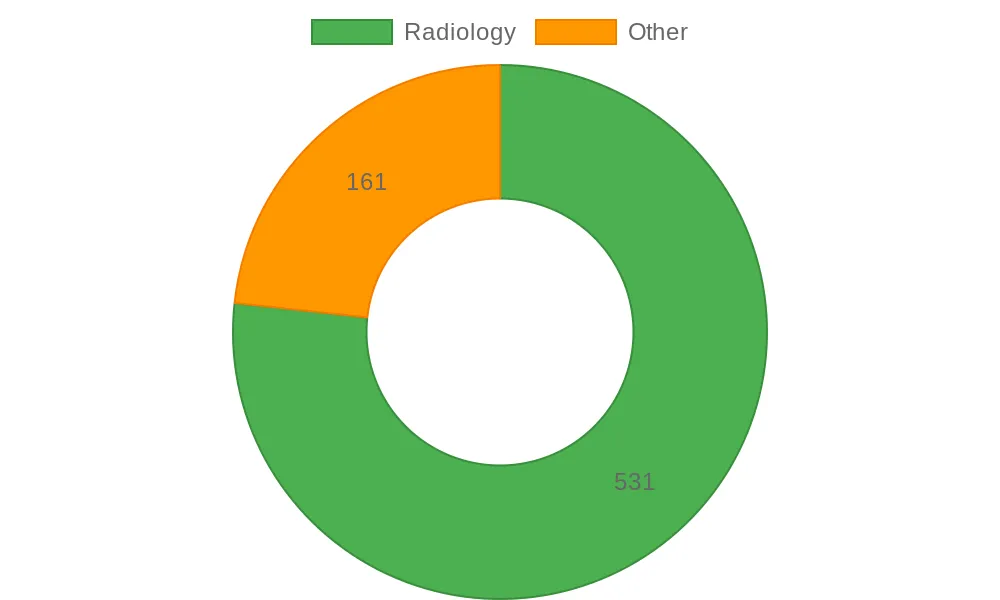

These aren’t pipe dreams. They’re regulated tools. The FDA has cleared 692 AI-enabled medical devices, with 531 focused on radiology – a clear sign of where the technology has gained real traction.
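To put the radiology figure in proportion, here is a small sketch that uses only the two device counts quoted above. The variable names are illustrative, and nothing beyond those two counts is assumed.

```python
# Device counts as quoted in the article.
total_cleared = 692   # AI-enabled medical devices cleared by the FDA
radiology = 531       # of those, devices focused on radiology

# Share of cleared devices that target radiology.
radiology_share = radiology / total_cleared

print(f"Radiology share of cleared devices: {radiology_share:.1%}")
```

That works out to a little under 77%, or roughly three of every four cleared devices.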

Imaging leads the pack: 90% of healthcare organizations use AI in imaging, and more than half of providers rely on it daily for diagnostics. But AI isn’t just making scans faster. It’s also handling the time-consuming paperwork that bogs down doctors. In the U.S., 79% of hospitals now use predictive models in their electronic health records to help with care decisions, discharge planning, and patient triage.

Doctors are taking notice. One Reddit user in r/medicine wrote:

“AI is not going to replace anyone in the medical field except maybe some clerical staff… I do envision AI being extensively utilized for consult communications, assisting with note-taking, and managing orders.”

Another shared:

“I am experimenting with its application in generating responses regarding medical reports, suitable for patients with little medical education.”

The bottom line? AI isn’t replacing clinicians. It’s soaking up the overflow – the administrative mess, the mental clutter, the repetitive busy work. And it’s doing all this quietly, behind the scenes.

When Reality Hits the Hype: Myths, Problems, and Truth

AI’s progress in healthcare comes with serious warnings. Not every tool actually helps, and not every clinician wants the change.

Photo Credit: GazeOn

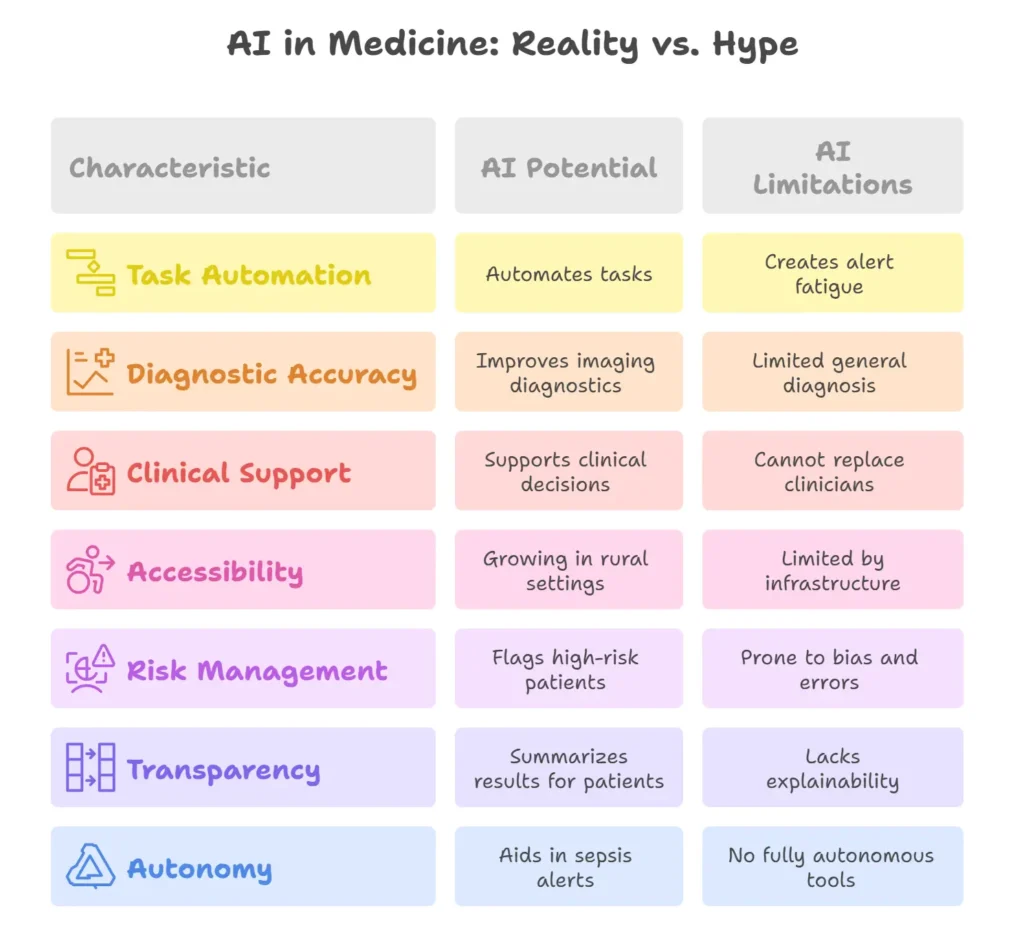

Let’s tackle the biggest myth first: that AI will replace doctors. The European Commission pushed back hard on this in 2025, saying AI exists to support human care, not replace it. It’s an extra layer of help, not an independent system.

Another false belief? That AI is always right. In 2025, the World Economic Forum flagged transcription errors in OpenAI’s Whisper, reminding everyone that even top models can make stuff up.

A physician on Reddit asked:

“If adequate and competent datasets are available for symptoms, signs and management of common and well studied diseases… what’s stopping AI from replacing or at least relieving physicians at Primary Healthcare Setups?”

The answer: messy reality. Pain complaints. Exhaustion. Multiple health conditions. Real medicine is complicated, and AI still can’t handle nuance well.

Then there’s bias. Dr. Adam Rodman told the Harvard Gazette: “Current data sets too often reflect societal biases that reinforce gaps in access and quality of care for disadvantaged groups… these data have the potential to cement existing biases into ever-more-powerful AI.”

Generative tools can also create alert fatigue. A 2024 PLOS ONE study by Razai and colleagues found that general practitioners complained about vague alerts and bloated reports that added more burden than benefit.

Doctors don’t hate technology – they hate friction. And sometimes, AI just creates another mess they have to clean up.

Real Success Stories: Where AI Makes a Difference

The most powerful AI stories often come from mid-size hospitals, tribal clinics, and government-led programs rather than big corporate rollouts.

UnityPoint Health used AI-powered predictive analytics to cut 30-day hospital readmissions by 40%. The system spots high-risk patients early, triggering targeted follow-ups and home care planning.

Johns Hopkins Hospital deployed an early-warning AI system for sepsis. It constantly analyzes vital signs, lab results, and notes to catch early warning signs. Results show patients monitored by the system are 20% less likely to die from sepsis.

The Indian Health Service (IHS), which covers over 600 facilities serving tribal communities, is rolling out AI to strengthen primary and emergency care. These models are designed specifically for Native American and Alaska Native needs, though full impact data is still being collected.

In the UK, the East Midlands Radiology Consortium (EMRAD) uses Mia™, a deep learning AI model from Kheiron Medical, to support breast cancer screening. It’s the first UK CE-marked AI radiology system and is already improving accuracy and efficiency.

Estonia built something even bigger. Their national health system features AI-powered infrastructure that integrates patient records, supports clinical decisions, and streamlines population health monitoring. The result? A more unified, accessible digital health backbone.

Each story proves the same point: when AI meets real need rather than just product ambition, it can shift outcomes in ways you can measure and feel.

The Roadblocks Still Slowing AI Down

Even with strong momentum, real barriers remain.

Data quality tops the list. Most training sets still have gaps – geographic, demographic, or economic – which skew outcomes and miss patient realities.

Explainability comes next. Physicians need to understand how a model reached its decision. Black-box models that don’t justify their outputs kill trust.

Access is another hurdle. Small clinics often lack the computing power or infrastructure to use AI tools effectively, even when they want to.

Policy is moving forward, but unevenly. The EU AI Act enforces strict rules, but U.S. efforts remain scattered across state lines.

Trust? Still a work in progress. As one Reddit user put it: “I’m okay with tools that help – but not ones that second-guess me without context. We don’t need more noise in the system.”

The next step in AI for healthcare won’t be about scale. It’ll be about getting the details right. Less friction, better alignment with what doctors actually need.

FAQs

How accurate is AI in diagnosing patients?

Most approved AI tools work best in narrow, well-defined clinical areas, particularly imaging. Radiology models account for roughly 77% of FDA-cleared AI-enabled devices (531 of 692). But general diagnosis is still limited by data gaps, symptom complexity, and context AI can’t always grasp.

Will AI replace doctors or nurses?

No. According to the European Commission, AI is designed to support medical professionals, not replace them. Clinicians remain essential, especially when patient needs involve subjective, multi-layered interpretation.

Is AI being used in rural hospitals or low-resource settings?

Yes, though with limitations. AI deployment is growing across tribal health systems like IHS and in countries like Estonia, where national infrastructure supports integration. But smaller clinics often lack the computing power or funding needed to fully adopt it.

What are the biggest risks of using AI in medicine?

Bias, lack of explainability, hallucinations in generative tools, and regulatory gaps are key concerns. Misdiagnosis due to flawed training data or blind reliance on opaque models can put patient safety at risk.

Can patients access AI-generated results directly?

In some systems, yes. AI-generated summaries are already being tested to improve patient understanding, especially in radiology and test results. However, final clinical interpretation still lies with the provider.

Are there any fully autonomous AI tools in healthcare?

No tool is fully autonomous in clinical care. All current deployments, including sepsis alerts, cancer screening tools, and EHR-integrated risk models, require oversight from licensed medical professionals.

The Bottom Line

The conversation around AI in healthcare is changing from what’s possible to what’s actually useful. Flashy claims are fading. What matters now is integration that doesn’t disrupt, oversight that doesn’t lag behind, and performance that reflects real clinical needs.

Whether it’s AI spotting tumors or flagging readmission risks, success depends not just on the technology but on how it’s governed, adopted, and trusted.

This next chapter won’t be about throwing more models into the workflow. It’ll be about removing friction, increasing transparency, and closing the gap between innovation and bedside reality.

In healthcare, AI doesn’t need to be louder. It needs to be smarter and quieter.